I hope you enjoyed the Easter weekend. Today I have teamed up with Chris Collins, a senior IT Finance manager and former colleague. Our final post on metrics covers unit costing, a topic on which Chris’s expertise has been invaluable. For those just joining our discussion on IT metrics, we have had six previous posts on various aspects of metrics. I recommend reading the Metrics Roundup and A Scientific Approach to Metrics to catch up on the discussion.

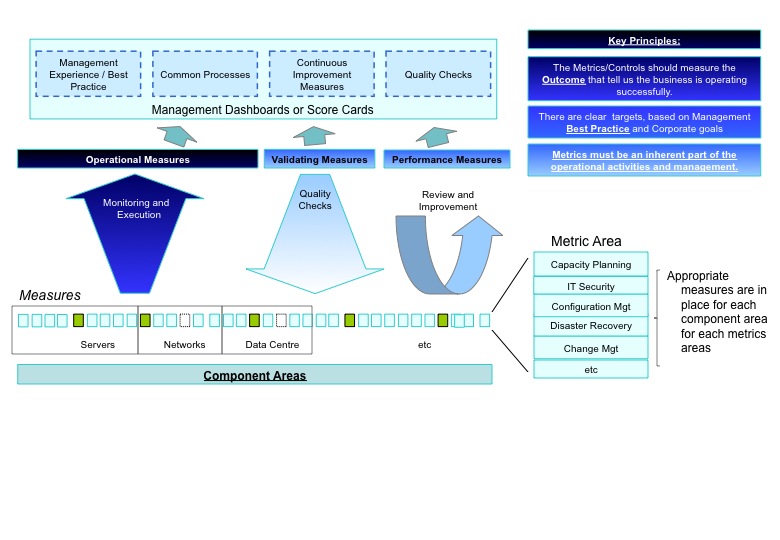

As I outlined previously, unit costing is one of the critical performance metrics (as opposed to operational or verification metrics) that a mature IT shop should leverage, particularly for its utility functions such as infrastructure (please see the Hybrid model for more information on IT utilities). Used properly, unit cost and the other performance metrics let you map a trajectory that drives your teams toward world-class performance while giving your users greater transparency.

For those just starting the metrics journey, realize that developing reliable, sustainable unit cost metrics requires significant foundational work first, including:

- IT service definitions should be completed and in place for the areas to be unit costed

- an accurate, ongoing asset inventory must be in place

- a clean, understandable set of financials, organized by account, must be available so that the business service cost can be easily derived

If you have these foundational elements in place, then you can quickly derive the unit costs for your function. I recommend partnering with your Finance team to accomplish unit costing, and you and your infrastructure function leaders should champion the effort. Look to apply a unit cost approach to the 20 to 30 functions within the utility space (from storage to mainframes to security to middleware, etc.). It usually works best to start with one or two of the most mature component functions and develop the practices and templates there. The IT Finance team should progress the effort as follows:

- Ensure they can easily segregate costs based on the service listing for that function

- Refine and segregate costs further if needed (e.g., are there tiers of service that should be created because of substantial cost differences?)

- Identify a volume driver to use as the basis of the unit cost (for example, for storage it could be terabytes of allocated storage)

- In parallel with the service identification and cost segregation work, begin developing a unit cost database that allows you to easily manipulate and report on unit cost. Specifically, the database should have:

  - the ability to accept RC and account-level assignments

  - the ability to capture expense and plan data from the general ledger

  - the ability to capture monthly volume feeds from source systems, including detailed volume data (like the user name for an email account or the application name tied to a server)
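
To make the database requirements above more concrete, here is a minimal sketch in Python using the standard-library `sqlite3` module. The table and column names (`gl_expense`, `volume_detail`, `actual_amt`, `plan_amt`, and so on) and the sample figures are hypothetical illustrations, not a prescribed design. The point is only that a service's unit cost for a month is its segregated cost divided by the summed volume driver, while the detail rows stay available for drill-down.

```python
import sqlite3

# Hypothetical schema: GL lines already tagged with RC, account and the
# service they were segregated into; volume feeds keep per-item detail.
SCHEMA = """
CREATE TABLE gl_expense (
    month TEXT, rc TEXT, account TEXT, service TEXT,
    actual_amt REAL, plan_amt REAL
);
CREATE TABLE volume_detail (
    month TEXT, service TEXT, detail_key TEXT, units REAL
);
"""

# Unit cost = total segregated actual cost / total volume driver, per service
# and month. Plan spend is carried along for variance reporting.
UNIT_COST_SQL = """
SELECT e.month, e.service, e.actual_total, e.plan_total, v.units_total,
       e.actual_total / v.units_total AS unit_cost
FROM (SELECT month, service,
             SUM(actual_amt) AS actual_total, SUM(plan_amt) AS plan_total
      FROM gl_expense GROUP BY month, service) AS e
JOIN (SELECT month, service, SUM(units) AS units_total
      FROM volume_detail GROUP BY month, service) AS v
  ON e.month = v.month AND e.service = v.service
ORDER BY e.month, e.service
"""


def build_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def load_example(conn: sqlite3.Connection) -> None:
    """Load hypothetical tier 1 storage figures for one month."""
    conn.executemany(
        "INSERT INTO gl_expense VALUES (?, ?, ?, ?, ?, ?)",
        [
            ("2024-01", "RC100", "depreciation", "storage_tier1", 300000.0, 290000.0),
            ("2024-01", "RC100", "labor", "storage_tier1", 110000.0, 115000.0),
        ],
    )
    conn.executemany(
        "INSERT INTO volume_detail VALUES (?, ?, ?, ?)",
        [
            ("2024-01", "storage_tier1", "app:billing", 60.0),  # allocated TB
            ("2024-01", "storage_tier1", "app:crm", 40.0),
        ],
    )


def unit_costs(conn: sqlite3.Connection) -> list[tuple]:
    return conn.execute(UNIT_COST_SQL).fetchall()


if __name__ == "__main__":
    db = build_db()
    load_example(db)
    for row in unit_costs(db):
        print(row)
```

With these made-up inputs the query reports $410,000 of actual cost over 100 allocated TB, or $4,100/TB for the month. Keeping the volume detail (application name per allocation) rather than only totals is what later lets a team explain why a unit cost moved.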

The function team should support the IT Finance team in ensuring the costs are properly segregated into the services the function has defined. Reasonable precision in the cost segregation is required, since later analysis will be for naught if the segregations are inaccurate. Once the initial unit costs are reported, the function’s technology team can begin its own analysis and work. First and foremost should be an industry benchmark exercise, which will quickly show you how your performance ranks against competitors and similar firms. Please reference the Leveraging Benchmarks page for best practices in this step.

Beyond benchmarking, you should leverage performance metrics like unit cost to develop a projected trajectory for your function’s performance. For example, if your unit cost for tier 1 storage is currently $4,100/TB, then the storage team should map out what their unit cost will be 12, 24, and even 36 months out given their current plans, initiatives, and storage demand. If your target is for them to achieve top-quartile cost, or median cost, they can now see whether their actions and efforts will deliver that future target. If they will not achieve it, they can add measures to close their gaps.
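
The trajectory exercise is simple arithmetic: the projected unit cost at each horizon is the projected service cost divided by the projected volume, compared against the target. Below is a minimal Python sketch. The `Projection` class, the cost and volume figures, and the $3,000/TB target are hypothetical; a real team would derive its inputs from its own plans, initiatives, and demand forecast.

```python
from dataclasses import dataclass


@dataclass
class Projection:
    months_out: int
    monthly_cost: float  # projected monthly cost of the service
    volume: float        # projected volume driver, e.g. allocated TB

    @property
    def unit_cost(self) -> float:
        return self.monthly_cost / self.volume


def assess(points: list[Projection], target: float) -> list[tuple[int, float, float]]:
    """Return (months_out, unit_cost, gap_to_target) for each horizon.

    A positive gap means the projected unit cost is still above target,
    i.e. the team needs additional measures to close it.
    """
    return [(p.months_out, p.unit_cost, p.unit_cost - target) for p in points]


# Hypothetical tier 1 storage plan: cost grows slowly while demand grows faster.
PLAN = [
    Projection(0, 410_000, 100),
    Projection(12, 430_000, 120),
    Projection(24, 450_000, 145),
    Projection(36, 470_000, 175),
]
TARGET = 3_000.0  # hypothetical top-quartile $/TB

if __name__ == "__main__":
    for months, cost, gap in assess(PLAN, TARGET):
        status = "on target" if gap <= 0 else f"${gap:,.0f}/TB above target"
        print(f"{months:>2} months: ${cost:,.0f}/TB ({status})")
```

In this made-up plan the target is reached only at the 36-month horizon; the 24-month point is still about $103/TB above it. That is exactly the kind of gap that tells a team whether it needs to add measures now.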

Further, you can now measure their progress toward the projected target on a regular basis and hold them accountable for it. This can be done not just for unit cost but for all of your critical performance measures (e.g., productivity, time to market, etc.). Setting goals and performance targets in this manner achieves far better results because it establishes a clear mechanism for understanding cause and effect between the team’s work and initiatives and the target metrics.
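
A regular accountability review amounts to comparing each reported actual against the projected path at the same checkpoint. The sketch below shows one hypothetical way to flag slippage; the function name, the 2% tolerance, and the checkpoint values are illustrative assumptions. The `lower_is_better` flag lets the same check cover measures such as productivity, where a higher value is the goal.

```python
def off_track(
    planned: dict[int, float],
    actual: dict[int, float],
    tolerance: float = 0.02,
    lower_is_better: bool = True,
) -> list[int]:
    """Return the checkpoints (e.g. month numbers) where the actual metric
    misses its planned value by more than `tolerance` (a fraction of plan)."""
    missed = []
    for checkpoint, value in sorted(actual.items()):
        if checkpoint not in planned:
            continue  # no projection for this checkpoint; nothing to compare
        plan = planned[checkpoint]
        if lower_is_better:
            slipped = value > plan * (1 + tolerance)
        else:
            slipped = value < plan * (1 - tolerance)
        if slipped:
            missed.append(checkpoint)
    return missed


# Hypothetical quarterly checkpoints for tier 1 storage unit cost ($/TB).
planned_cost = {3: 3970.0, 6: 3840.0, 9: 3710.0, 12: 3583.0}
actual_cost = {3: 3990.0, 6: 3950.0}

if __name__ == "__main__":
    print(off_track(planned_cost, actual_cost))
```

Here month 3 is within tolerance while month 6 is flagged, which would prompt the team to explain the slippage and adjust its initiatives before the next review.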

A broader approach you may also want to use is a unit cost progress chart for all of your utility functions. On this chart, with cost as a percentage of current cost on the y axis and future years on the x axis, establish a minimum improvement line of 5% per year. The rationale is that improving hardware (e.g., servers, storage, etc.) and improving productivity yield a rising tide of unit cost improvement of at least 5% a year. Thus, to truly progress, any utility function that is below 1st quartile should well exceed a 5% per year improvement. This approach also conveys the necessity and urgency of not resting on our laurels in the technology space. Often, with this set of performance metrics practices employed along with CPI and other best practices, you can achieve 1st quartile performance for your utility function within 18 to 24 months.
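
The minimum improvement line is a compound decline: after y years at 5% per year, cost is (1 - 0.05)^y of today's cost, or roughly 95%, 90.25%, and 85.7% after one, two, and three years. A function's own plan can be reduced to an equivalent compound annual improvement rate and compared against that line. The Python sketch below assumes a constant annual rate; the function names and the example plan ($4,100/TB today falling to $2,700/TB in three years) are hypothetical.

```python
def minimum_line(years: int, rate: float = 0.05) -> list[float]:
    """Cost as a percentage of current cost on the minimum improvement line."""
    return [100.0 * (1 - rate) ** y for y in range(years + 1)]


def annual_improvement(current: float, future: float, years: float) -> float:
    """Equivalent compound annual reduction in unit cost between two points."""
    return 1 - (future / current) ** (1 / years)


def beats_tide(current: float, future: float, years: float, rate: float = 0.05) -> bool:
    """True if the planned trajectory improves faster than the minimum line."""
    return annual_improvement(current, future, years) > rate


if __name__ == "__main__":
    print([round(p, 2) for p in minimum_line(3)])
    # Hypothetical plan: $4,100/TB today, $2,700/TB in three years.
    rate = annual_improvement(4100.0, 2700.0, 3)
    print(f"{rate:.1%} per year; beats 5% line: {beats_tide(4100.0, 2700.0, 3)}")
```

This hypothetical plan works out to roughly 13% per year, comfortably above the 5% tide, whereas a function improving only 3% a year would be falling behind the market even though its costs are going down.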

What has been your experience with unit cost or other performance measures? Were you able to achieve sustained advantage with these metrics?

Best,

Jim Ditmore and Chris Collins